I started with pixels about 2 years ago when I first saw Matt Johnson’s show (www.livinglightshows.com / johnsonfamilylightshow.com), so I’m in no way an expert, but I thought I’d write this to see if it helps others.

I’ve been watching the Official Xlights Forum on Facebook and have seen that many people have the same questions I did. This post is an attempt to help all of you on your pixel journey. Below are a few of the terms explained in what I hope is an easy manner.

Universe

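This term refers to an addressable space: for most controllers it will be 510 or 512 channels. The numbering comes from the DMX protocol, which supports up to 512 channels in each universe. 510 is popular with RGB pixels because it divides evenly by 3, so exactly 170 pixels fit in one universe and no pixel gets split across two universes.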

Channels

This seems to confuse a lot of people, so I’ll try to keep it simple.

First, check what type of lights/pixels you have: RGB, RGBW or single colour. Once you know this it is normally quite easy to work out the channels.

A channel is one addressable value, and each pixel uses one channel per colour: RGB pixels use 3 (one for red, one for green and one for blue), RGBW pixels use 4, and single colour lights generally use 1 (think of on/off for single colour).

When working out the channels you need for a prop, it is important to count the PIXEL chips and not the individual lights, unless each individual light has its own chip. One chip controlling several lights is quite common in strip lighting.
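To make the counting rule concrete, here is a minimal Python sketch that turns a pixel-chip count into a channel count. The type labels and the `prop_channels` helper are my own hypothetical names for illustration, not anything built into Xlights, and the 150-LED strip is a made-up example.

```python
# Channels used by one pixel chip, per pixel type (labels are illustrative).
CHANNELS_PER_PIXEL = {
    "single": 1,  # single colour: one channel (think on/off)
    "rgb": 3,     # red, green, blue
    "rgbw": 4,    # red, green, blue, white
}


def prop_channels(pixel_chips: int, pixel_type: str) -> int:
    """Return the channels a prop needs.

    Count pixel CHIPS, not individual LEDs: on strips where one chip
    drives several LEDs, pass the number of chips.
    """
    if pixel_chips < 0:
        raise ValueError("pixel_chips must be non-negative")
    return pixel_chips * CHANNELS_PER_PIXEL[pixel_type.lower()]


# Hypothetical strip: 150 LEDs where each chip drives 3 LEDs = 50 chips.
print(prop_channels(50, "rgb"))    # 150
print(prop_channels(100, "RGBW"))  # 400
```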

Channel Mapping – Basics

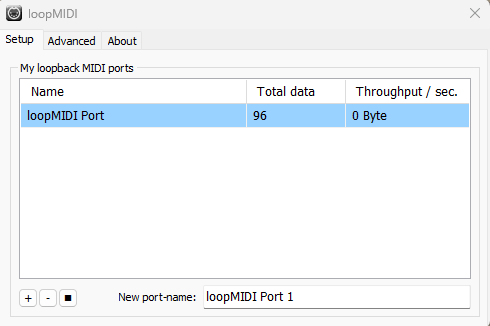

This is another one that seems to confuse people, and I think that is mainly because you set it in more than one place. For example, you may sequence in Xlights and then move your show to FPP, and you also need to make sure you map your channels correctly on your controller.

Channel mapping is basically just telling your software and your controllers how to “talk” to each pixel. For my shows I’ve always used Unicast and given each controller its own IP address.

In Xlights, on the network setup tab, you just need to specify the number of universes per controller and the universe size. Most controllers will have a universe size of 510 or 512.

If you are using RGB pixels and know your pixel count, just multiply the pixel count by 3 to get your total channels.

For example if you have 1000 RGB pixels you’ll need 3000 channels to control these.

To figure out how many universes that is, divide your channel count by your universe size:

For example:

510-channel universes: 3000 / 510 = 5.88 universes

512-channel universes: 3000 / 512 = 5.86 universes

In the above example you then need to round UP to the next whole number to capture all channels. So in Xlights you would add 6 universes, which will capture all 3000 channels (plus a few unused channels in the last universe).
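The round-UP step is easy to get wrong by hand, so here is a small Python sketch of the same calculation using `math.ceil`. `universes_needed` is a hypothetical helper name; the 1000-pixel figure is the example from above.

```python
import math


def universes_needed(total_channels: int, universe_size: int) -> int:
    """Round UP so the last, partly filled universe is still included."""
    return math.ceil(total_channels / universe_size)


channels = 1000 * 3  # 1000 RGB pixels x 3 channels each
for size in (510, 512):
    count = universes_needed(channels, size)
    unused = count * size - channels  # spare channels left in the last universe
    print(f"{size}-channel universes: {count} needed, {unused} spare channels")

# 510-channel universes: 6 needed, 60 spare channels
# 512-channel universes: 6 needed, 72 spare channels
```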

Once your channel mapping is sorted in Xlights, make sure your controllers match it as well. One thing to be aware of: in Xlights, channel 1 does not start again on each controller, but on the controller itself the start channel will be 1.

For example, if your first controller in Xlights has 6 universes of 510 channels in unicast on IP address 192.168.1.15, Xlights will show that it covers channels 1 to 3060.

On the next controller you add, Xlights will start at channel 3061; however, on the controller itself its start channel will be Universe 1, Channel 1. Using this method, you do not need to make the “number” column in Xlights match your controller.

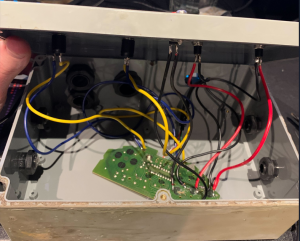
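The difference between Xlights numbering and controller numbering can be sketched in Python as below. It assumes every universe is the same size and that the second controller (hypothetical) also has 6 universes of 510 channels; `controller_ranges` and `to_controller_address` are illustrative names only.

```python
from typing import List, Tuple


def controller_ranges(universes_per_controller: List[int],
                      universe_size: int) -> List[Tuple[int, int]]:
    """Return (first, last) Xlights channel numbers for each controller.

    Xlights numbers channels continuously, so each controller starts
    right after the previous one ends.
    """
    ranges = []
    start = 1
    for universes in universes_per_controller:
        end = start + universes * universe_size - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


def to_controller_address(xlights_channel: int, controller_start: int,
                          universe_size: int) -> Tuple[int, int]:
    """Convert an Xlights channel to (universe, channel) as the controller
    sees it, where its own numbering starts at Universe 1, Channel 1."""
    offset = xlights_channel - controller_start  # 0-based within this controller
    return offset // universe_size + 1, offset % universe_size + 1


ranges = controller_ranges([6, 6], 510)
print(ranges)                                          # [(1, 3060), (3061, 6120)]
print(to_controller_address(3061, ranges[1][0], 510))  # (1, 1)
```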

Connecting Pixels to a Controller

Nearly all pixels come with either colour coded wiring OR markings on the pixel itself that indicate 12V, DI, GND on one side and 12V, DO, GND on the other (this assumes 12VDC pixels for simplicity’s sake).

Most pixels will also have an arrow that indicates the direction the signal travels through the pixels. The side with the arrow pointing “in” to the pixel is the side you connect to the controller. If there is no arrow, connect the side marked DI (Data Input) to your controller.

If you get this wrong your pixels will NOT light! It is also important to connect the wires to your controller correctly: if you mix them up you may blow a fuse OR blow a pixel chip (or a lot of them if it’s a string).

Networking

For your controllers, always use private network addresses if you are addressing using unicast (more info on that here: https://www.iplocation.net/public-vs-private-ip-address).

IP addresses are a little like post office boxes: if two devices have the same IP address you get a conflict, and no one knows where to actually deliver to.

There are normally two ways to set up controller IP addresses: DHCP or static.

DHCP is a method where the controller asks a router or another connected device for an IP address. This works, but the downside is that the address DHCP issues can change. Most routers let you reserve an address for a device to stop this, but by design DHCP just gives out “an available address”, not necessarily the same address as last time.

Static means you set the address directly on the controller.

The main thing to watch here is that you use the same IP range and the same subnet mask on every device; if you don’t, you can’t communicate with your controllers.

My personal preference is static. Last year I had three controllers with the following IP addresses:

IP: 192.168.0.51 Subnet: 255.255.255.0

IP: 192.168.0.52 Subnet: 255.255.255.0

IP: 192.168.0.53 Subnet: 255.255.255.0

Notice how the subnet mask is the same for every device and only the last number of the IP address changes. Addressing this way (with a 255.255.255.0 mask) gives you up to 254 individual IP addresses that can all talk to each other.

Connect your devices through a gigabit Ethernet switch, and when testing from Xlights make sure your Xlights computer uses the same IP range and subnet mask; otherwise it cannot see or send traffic to your controllers.
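Python’s standard `ipaddress` module can sanity-check an address plan like the one above. This is a minimal sketch assuming every device uses the same 255.255.255.0 mask; `shared_network` is a hypothetical helper that flags duplicate addresses (the post office box conflict), non-private addresses, and devices sitting in different subnets.

```python
import ipaddress

SUBNET_MASK = "255.255.255.0"
CONTROLLERS = ["192.168.0.51", "192.168.0.52", "192.168.0.53"]


def shared_network(ips, mask):
    """Return the single network all addresses share.

    Raises ValueError if an address is duplicated, is not private,
    or sits in a different subnet from the others.
    """
    if len(set(ips)) != len(ips):
        raise ValueError("duplicate IP address: two devices would conflict")
    interfaces = [ipaddress.IPv4Interface(f"{ip}/{mask}") for ip in ips]
    for iface in interfaces:
        if not iface.ip.is_private:
            raise ValueError(f"{iface.ip} is not a private address")
    networks = {iface.network for iface in interfaces}
    if len(networks) != 1:
        raise ValueError(f"addresses span several subnets: {sorted(str(n) for n in networks)}")
    return networks.pop()


net = shared_network(CONTROLLERS, SUBNET_MASK)
print(net)                     # 192.168.0.0/24
print(len(list(net.hosts())))  # 254 usable addresses
```

To check your Xlights computer too, add its address to the list. A (hypothetical) laptop on 192.168.1.20 would raise a `ValueError`, because with this mask it sits in 192.168.1.0/24 rather than 192.168.0.0/24.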

Null Pixels

The role of a null pixel is to improve the data signal from your controller to your first pixel: each pixel chip regenerates the data it passes on, so a null pixel acts a bit like a repeater on a long cable run. A lot of confusion seems to arise from this. If you specify null pixels on a controller output, the controller expects the null pixel or pixels to be wired inline before your actual pixel 1 on that output. On most controllers a null pixel still takes up channels, as it needs to receive data.

There are a few things you can do to avoid having to use null pixels:

1) Put your controllers closer to your props

2) Use different controllers: some controllers seem to be able to send data over greater lengths than others

3) Use an appropriate type of wiring

4) Use receiver boards instead of null pixels (these boards act kind of like a slave extension of the first controller, taking its data on to the next section)

Power Injection

Power injection refers to the practice of powering a prop directly from a power supply rather than from a controller. There are benefits to doing this:

1) Most controllers can only supply 4-5A per output (60W at 12VDC and 5A), which means you only have a certain amount of power available before you are overdrawing current and likely to blow a fuse. Power injection allows you to use the full capacity of a power supply. It is still STRONGLY recommended that you fuse anything you inject directly, with a fuse rated for its current draw.

2) In some cases I’ve found that injecting power allowed me to send data over a greater length

3) You can power multiple props across multiple controllers using a separate power supply (be sure to tie the negatives of your supplies together)

If you want to try power injection, the simplest way is to leave the positive from your controller disconnected from your prop and instead connect the positive from a power supply directly to the positive on your pixels. THEN comes the slightly tricky bit: you need to make sure the ground wire of your prop connects to both the ground of the PSU and the ground of the controller’s pixel output. This is because the signal needs both the data wire and a shared ground, not just the data wire.
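As a rough check of whether a prop will overload a single controller output, here is a Python sketch. It assumes 12VDC pixels drawing 0.29W each at full white (the WS2811 figure used in the power calculation section below) and a 5A output limit; check the actual ratings of your own pixels and controller. All function names are hypothetical.

```python
VOLTS = 12.0            # 12VDC pixels
WATTS_PER_PIXEL = 0.29  # assumed full-white draw per pixel
OUTPUT_MAX_AMPS = 5.0   # assumed per-output limit; check your controller


def output_budget_watts(volts, max_amps):
    """Power one output can deliver: Volts x Amps = Watts."""
    return volts * max_amps


def prop_current_amps(pixels, watts_per_pixel, volts):
    """Full-white current for a prop: (pixels x watts per pixel) / volts."""
    return pixels * watts_per_pixel / volts


def needs_injection(pixels, watts_per_pixel=WATTS_PER_PIXEL,
                    volts=VOLTS, max_amps=OUTPUT_MAX_AMPS):
    """True if the prop would draw more than the output can supply."""
    return prop_current_amps(pixels, watts_per_pixel, volts) > max_amps


print(output_budget_watts(VOLTS, OUTPUT_MAX_AMPS))  # 60.0
print(needs_injection(150))  # False (about 3.63A)
print(needs_injection(300))  # True (about 7.25A)
```

The `prop_current_amps` figure is also the current you would size the fuse on an injected feed against.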

Power Calculation

This one always seemed to stump a lot of people as well. If you are trying to figure out what wattage a prop will draw, you can use the calculations below.

Volts x Amps = Watts. For example, a 12VDC 30A PSU gives 12 x 30 = 360W.

If you know the wattage and want the amps:

Amps = Watts divided by Volts. For a Meanwell 350W 12VDC PSU that becomes 350 / 12 = 29.17A, which is normally rounded to 30A.

Why is this important? If you are making a prop with a lot of lights, you need to know how much power the prop needs to light at 100% full white. For example, if you have a matrix with 1,152 12VDC WS2811 pixels and you know each pixel draws 0.29W, you can do the calculation below:

Total wattage draw = 0.29W x 1152 pixels = 334.08W

Total amp draw = 334.08W / 12V = 27.84A

The above two numbers make it easy for you to then decide what type of power supply you may need to run that particular prop.

Personally, I find breaking a large prop down into sections works a bit better than trying to push power through 1000+ lights from one point.
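The same arithmetic in Python, as a minimal sketch using the matrix figures above. The 10A per-section limit in the last step is a hypothetical number chosen only to illustrate splitting a prop into sections; use whatever your power supplies, wiring and fuses are actually rated for.

```python
import math

VOLTS = 12.0            # 12VDC WS2811 pixels
WATTS_PER_PIXEL = 0.29  # per pixel at 100% full white
PIXELS = 1152           # matrix size from the example


def prop_draw(pixels, watts_per_pixel, volts):
    """Return (watts, amps) at full white: W = pixels x W per pixel, A = W / V."""
    total_watts = pixels * watts_per_pixel
    return total_watts, total_watts / volts


def sections_needed(pixels, watts_per_pixel, volts, max_amps_per_section):
    """Fewest sections so that no section draws more than max_amps_per_section."""
    pixels_per_section = math.floor(max_amps_per_section * volts / watts_per_pixel)
    return math.ceil(pixels / pixels_per_section)


total_w, total_a = prop_draw(PIXELS, WATTS_PER_PIXEL, VOLTS)
print(f"{total_w:.2f} W, {total_a:.2f} A")  # 334.08 W, 27.84 A
print(f"{350 / VOLTS:.2f} A")               # 29.17 A from a 350W 12V supply
print(sections_needed(PIXELS, WATTS_PER_PIXEL, VOLTS, 10.0))  # 3 (hypothetical 10A limit)
```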

Falcon Pi Player (FPP)

Falcon Pi Player is software that runs on a Raspberry Pi. It can take *.FSEQ files from Xlights and run them on a schedule, sending the required data out to your controllers to run your light show.

Now that Xlights has xSchedule, some people are moving away from FPP to xSchedule. Personally, I like being able to use low-power Raspberry Pis that I can leave on all the time to run the show; it frees up a computer for me to sequence on without affecting the running show.

A special note about FPP: the SD image you download is incredibly simple and easy to use, whereas older-style Raspberry Pi images required you to flash them in certain ways. The latest FPP builds just need to be dragged and dropped onto an SD card; then you just boot the Pi and watch until it’s fully loaded the first time.

Should I use AC timers to turn off my controllers/props?

This comes down to personal preference, but I normally do this so that during the day none of my controllers or props have power. If no electricity is flowing, issues such as short circuits cannot occur. This year I saw some posts from people who unfortunately lost equipment while power was on to their gear during the day when they were not home.

You can use any AC style timer, but if you can afford it, Belkin make a device called the Wemo Insight which will monitor your power draw and let you see the state of the device even when you are not home.

Should I worry about water/snow/etc?

In reality you should worry about sealing your equipment before it rains or snows, so that water, snow etc. don’t cause issues. Unfortunately, water can sometimes still creep in even if you take the steps below.

Precautions you should take to keep water, dust etc. out of controller boxes and other equipment are:

1) If you use a box for your controller, make sure your cable glands are IP68 rated and that they actually seal when you tighten them.

2) Use IP68 rated enclosures, and be sure any o-rings or silicone seals are in place correctly before sealing your boxes.

3) Use watertight solder sleeves. If you can’t get these, use heatshrink, then wrap the heatshrink with corona tape, then put a small layer of insulation tape on top to stop the corona tape sticking to itself.

4) Use drip loops. A drip loop is simply a loop in a cable, so that if water tracks down the cable it drips off at the bottom of the loop and won’t track all the way back to a controller or power supply.

5) Periodically check your enclosures!

This year our show ran every night regardless of the weather. My controllers were all indoors, however. Last year I had one controller outside, and once everything was operational I put a layer of silicone around the box to make sure it was watertight. In my area it doesn’t snow, so I don’t have to deal with that.

This year I made sure any strip lighting we had cut had the ends siliconed.

I’m just starting out, what should I do?

If you are just starting out, the simplest thing you can do is buy a power supply, a lighting controller and some pixels. See if you can map them and get them running using Test mode in Xlights.

After you get them running in test mode, make a basic sequence in Xlights and then, if you feel comfortable, order more lights and props 😀